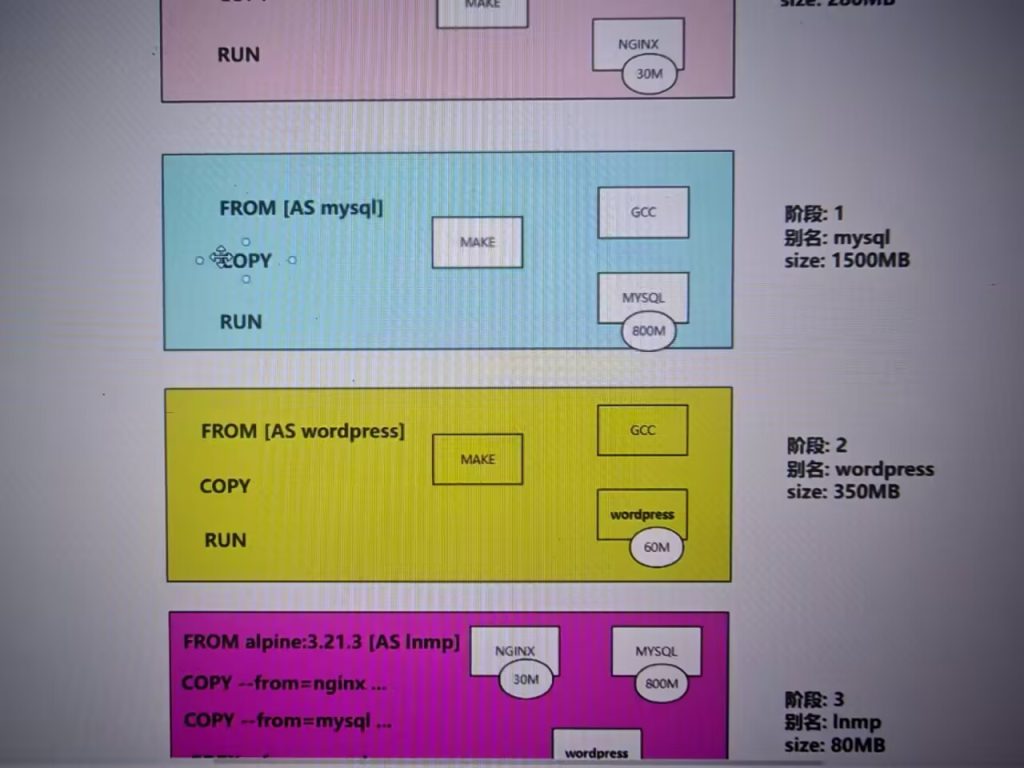

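Multi-stage builds (multiple FROM): building a game image

1. Prepare the Dockerfile

A Dockerfile can contain several FROM instructions, i.e. several stages, and a later stage can reference files produced by an earlier one.

For example, a source build needs gcc, g++, make and similar tools, which take up space in the image. With a multi-stage build, the final stage takes only the compiled service from the build stage and leaves the toolchain behind, which reduces the image size.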

FROM alpine:3.21.3 AS myweb

RUN sed -i 's#dl-cdn.alpinelinux.org#mirrors.aliyun.com#' /etc/apk/repositories && \
    apk update && apk add openssh-server curl wget gcc g++ make && \
    wget "https://sourceforge.net/projects/pcre/files/pcre/8.44/pcre-8.44.tar.gz" && \
    wget "http://nginx.org/download/nginx-1.20.2.tar.gz" && \
    tar xf pcre-8.44.tar.gz && \
    tar xf nginx-1.20.2.tar.gz && \
    rm -f nginx-1.20.2.tar.gz pcre-8.44.tar.gz && \
    cd nginx-1.20.2 && \
    ./configure --prefix=/usr/local/nginx --with-pcre=/pcre-8.44 --without-http_gzip_module && \
    make && \
    make install

CMD ["tail","-f","/etc/hosts"]

FROM alpine:3.21.3 AS apps

# MAINTAINER is deprecated; LABEL maintainer=... is the recommended replacement
MAINTAINER com-linux

LABEL school=com \
      class=linux \
      auther=jasonhao \
      address="haoshuaicong"

# --from names the stage to copy from: its AS alias or, if the stage has no alias, its zero-based index (e.g. --from=0).
COPY --from=myweb /usr/local/nginx /usr/local/nginx

RUN sed -i 's#dl-cdn.alpinelinux.org#mirrors.aliyun.com#' /etc/apk/repositories && \
    apk update && apk add openssh-server curl && \
    sed -i 's@#PermitRootLogin prohibit-password@PermitRootLogin yes@g' /etc/ssh/sshd_config && \
    ln -sv /usr/local/nginx/sbin/nginx /usr/sbin && \
    rm -rf /var/cache/ && \
    ssh-keygen -A

WORKDIR /com-nginx-1.20.2/conf

HEALTHCHECK --interval=3s \
            --timeout=3s \
            --start-period=30s \
            --retries=3 \
            CMD curl -f http://localhost/ || exit 1

ADD com-killbird.tar.gz /usr/local/nginx/html

COPY start.sh /

EXPOSE 80 22

CMD ["sh","-x","/start.sh"]

# CMD ["tail","-f","/etc/hosts"]

2. Prepare the startup script start.sh

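#!/bin/sh

# Set the initial root password: first argument, else $com_ADMIN, else 123456
if [ -n "$1" ]
then
    echo "root:$1" | chpasswd
elif [ -n "$com_ADMIN" ]
then
    echo "root:${com_ADMIN}" | chpasswd
else
    echo root:123456 | chpasswd
fi

# Start the sshd service (it daemonizes itself)
/usr/sbin/sshd

# Start nginx in the foreground so it keeps the container running
/usr/local/nginx/sbin/nginx -g "daemon off;"

3. Start the build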
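Before building, the password-precedence logic of start.sh can be checked on the host without a container. The sketch below is a hypothetical refactor, not part of the original script: the helper `pick_root_password` prints the password start.sh would pipe into `chpasswd` (first argument, then `$com_ADMIN`, then the default `123456`) instead of calling `chpasswd`, so the branch order can be tested in isolation.

```shell
#!/bin/sh
# Hypothetical helper mirroring the if/elif/else in start.sh:
# prints the password that would be piped into chpasswd.
pick_root_password() {
    if [ -n "$1" ]; then
        printf '%s\n' "$1"                # 1st choice: script argument
    elif [ -n "${com_ADMIN:-}" ]; then
        printf '%s\n' "$com_ADMIN"        # 2nd choice: environment variable
    else
        printf '%s\n' "123456"            # fallback default
    fi
}

# Example: pick_root_password ""   -> 123456 when com_ADMIN is unset
```

In the real container the two inputs map to `docker run ... demo:v1.8 sh -x /start.sh MyPass` (arguments after the image replace CMD, so `MyPass` becomes `$1`) or `docker run -e com_ADMIN=MyPass ... demo:v1.8`.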

[root@elk92 multiple-step-build]# ll

total 916

drwxr-xr-x 2 root root 4096 Mar 24 10:03 ./

drwxr-xr-x 4 root root 4096 Mar 24 09:08 ../

-rw-r--r-- 1 root root 1679 Mar 24 10:03 Dockerfile

-rw-r--r-- 1 root root 918998 Mar 24 10:03 com-killbird.tar.gz

-rw-r--r-- 1 root root 313 Mar 24 10:00 start.sh

[root@elk92 multiple-step-build]#

[root@elk92 multiple-step-build]#

[root@elk92 multiple-step-build]# docker build -t demo:v1.8 .

[root@elk92 multiple-step-build]#

[root@elk92 multiple-step-build]# docker history demo:v1.8

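# docker history <image> shows the instruction that created each layer and the size it added; use it to find what makes the image large

4. Test access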

[root@elk92 ~]# docker run -d --name games -p 81:80 demo:v1.8

8884d2c8f22430b985d47f4b7136c9f968fe28e0512572aee2b93570556b5995

Excluding files from the build with .dockerignore

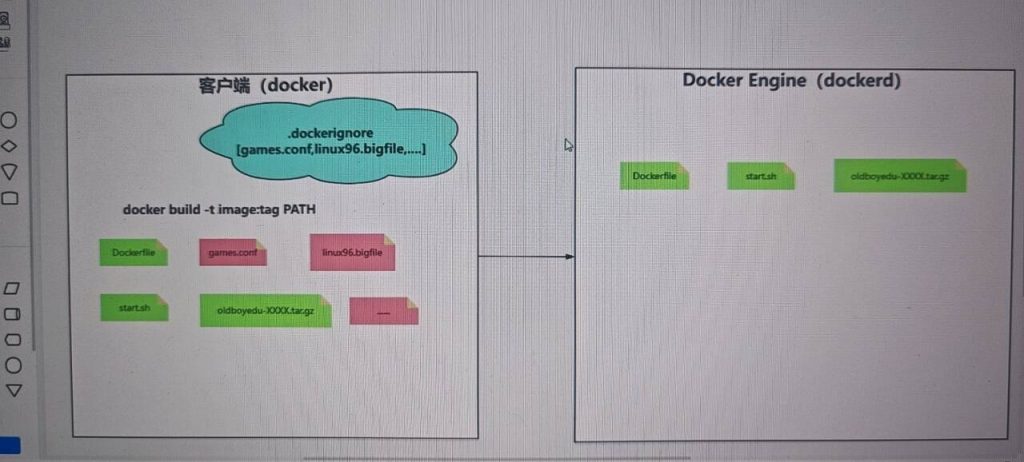

1. How Docker builds an image

- 1.1 Load the local files (the build context), leaving out anything matched by .dockerignore.

- 1.2 Send these files to the Docker daemon.

- 1.3 The Docker daemon builds the image.

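2. Use .dockerignore to exclude unneeded files

In the example below, two files total about 2 GB. The build does not need them, but by default every file in the current directory is sent to the daemon, which slows the build down.

So list the files that the Dockerfile build does not need in .dockerignore.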
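To get a rough idea of what will be sent, the sketch below lists the top-level entries of a directory that its .dockerignore does not exclude. It is an approximation under stated assumptions: it applies shell glob matching to top-level names only and ignores `**`, `!` exception lines and path patterns, all of which Docker's real matcher supports. The function name `list_context` is hypothetical.

```shell
#!/bin/sh
# list_context DIR: print top-level names in DIR that a simple .dockerignore
# (one glob per line, '#' comments allowed) would NOT exclude from the context.
list_context() {
    dir=$1
    for path in "$dir"/* "$dir"/.[!.]*; do
        [ -e "$path" ] || continue            # skip unmatched glob literals
        name=${path##*/}
        skip=no
        if [ -f "$dir/.dockerignore" ]; then
            while IFS= read -r pattern || [ -n "$pattern" ]; do
                case $pattern in ''|'#'*) continue ;; esac
                # unquoted $pattern is used as a glob, e.g. linux*
                case $name in $pattern) skip=yes; break ;; esac
            done < "$dir/.dockerignore"
        fi
        if [ "$skip" = no ]; then
            printf '%s\n' "$name"
        fi
    done
}

# Example: list_context .
```

With the .dockerignore shown in this example (`linux*` and `11111111111111111111`), only Dockerfile, start.sh, com-killbird.tar.gz and .dockerignore remain, which is consistent with the context dropping from 2.1G to about 925 kB.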

[root@elk92 multiple-step-build]# docker build -t demo:v1.9 .

Sending build context to Docker daemon 2.1G

...

[root@elk92 multiple-step-build]# ll -h

total 2.1G

drwxr-xr-x 2 root root 4.0K Mar 24 11:02 ./

drwxr-xr-x 4 root root 4.0K Mar 24 09:08 ../

-rw-r--r-- 1 root root 20M Mar 24 10:49 11111111111111111111

-rw-r--r-- 1 root root 1.7K Mar 24 10:03 Dockerfile

-rw-r--r-- 1 root root 30 Mar 24 11:02 .dockerignore

-rw-r--r-- 1 root root 1.0G Mar 24 10:57 linux-002.bigfile

-rw-r--r-- 1 root root 1.0G Mar 24 10:57 linux.bigfile

-rw-r--r-- 1 root root 898K Mar 24 10:03 com-killbird.tar.gz

-rw-r--r-- 1 root root 313 Mar 24 10:00 start.sh

[root@elk92 multiple-step-build]#

[root@elk92 multiple-step-build]# cat .dockerignore

linux*

11111111111111111111

[root@elk92 multiple-step-build]#

[root@elk92 multiple-step-build]# docker build -t demo:v1.9 .

Sending build context to Docker daemon 925.2kB

...

3. How the test files were created

[root@elk92 multiple-step-build]# dd if=/dev/zero of=linux.bigfile count=1024 bs=1M

Ideas for optimizing a Dockerfile

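In short, the principle of Dockerfile optimization is: "build images that are as small as possible, as fast as possible."

Faster builds

- 1) Put instructions that rarely change near the top and frequently changing ones near the bottom, so the build cache can be reused as much as possible.

- 2) Where it does not affect functionality, merge instructions to reduce the number of image layers, and with it the number of intermediate containers started (see docker image ls -a).

- 3) Use .dockerignore to exclude files that do not need to be sent to the Docker daemon.

- 4) Point package sources (yum, apt, apk) at nearby mirrors, such as domestic mirrors in China; the speedup is clearly noticeable.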

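Smaller images

- 1) Delete cache files, e.g. rm -rf /var/cache/yum.

- 2) Remove packages that are no longer needed, such as the compilers used for a source build and downloaded archives. Do this in the same RUN instruction that created them; deleting files in a later layer does not shrink the earlier one.

- 3) Use a smaller base image, such as alpine.

- 4) Use multi-stage builds, which also reduce the size of the final image.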
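Point 2 under "Faster builds" can be checked mechanically: according to Docker's best-practices guide, only RUN, COPY and ADD create filesystem layers. A minimal sketch, assuming each instruction keyword starts its own line (continuation lines are not counted); `count_layer_instructions` is a hypothetical name.

```shell
#!/bin/sh
# count_layer_instructions FILE: count RUN/COPY/ADD instructions in a Dockerfile.
# Instruction keywords are case-insensitive, hence grep -i.
count_layer_instructions() {
    grep -ciE '^[[:space:]]*(RUN|COPY|ADD)[[:space:]]' "$1"
}

# Example: count_layer_instructions Dockerfile
```

For a multi-stage Dockerfile such as the one above, the count covers every stage, although only the final stage's layers end up in the built image.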

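Docker Compose quick start: single-host orchestration

1. What is Docker Compose

Docker Compose is a tool for managing a group of containers. When an application is made up of several containers (a cluster, for example), each one is defined as a service; Compose also supports volumes and custom networks.

In short, one Compose command starts many containers, i.e. multi-container deployment on a single host.

Recommended reading: https://docs.docker.com/reference/compose-file/

2. Example configuration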

[root@elk92 zabbix]# cat docker-compose.yml

version: '3.3'

# Service definitions
services:
  # Custom service name
  mysql-server:
    # Image name
    image: mysql:8.0.36-oracle
    # Restart policy
    restart: always
    # Environment variables
    environment:
      MYSQL_ROOT_PASSWORD: root_pwd
      MYSQL_DATABASE: zabbix
      MYSQL_USER: zabbix
      MYSQL_PASSWORD: zabbix_pwd
    # Custom network to join
    networks:
      - zabbix-net
    # Startup command (arguments passed to mysqld)
    command: ["--character-set-server=utf8", "--collation-server=utf8_bin", "--default-authentication-plugin=mysql_native_password"]

  zabbix-java-gateway:
    image: zabbix/zabbix-java-gateway:alpine-7.2-latest
    restart: always
    networks:
      - zabbix-net

  zabbix-server:
    # Dependency: mysql-server is started before this service
    # (depends_on only orders startup; it does not wait until MySQL is ready)
    depends_on:
      - mysql-server
    image: zabbix/zabbix-server-mysql:alpine-7.2-latest
    restart: always
    environment:
      DB_SERVER_HOST: mysql-server
      MYSQL_DATABASE: zabbix
      MYSQL_USER: zabbix
      MYSQL_PASSWORD: zabbix_pwd
      MYSQL_ROOT_PASSWORD: root_pwd
      ZBX_JAVAGATEWAY: zabbix-java-gateway
    networks:
      - zabbix-net
    # Port mapping
    ports:
      - "10051:10051"

  zabbix-web-nginx-mysql:
    depends_on:
      - zabbix-server
    image: zabbix/zabbix-web-nginx-mysql:alpine-7.2-latest
    ports:
      # - "80:8080"
      - "90:8080"
    restart: always
    environment:
      DB_SERVER_HOST: mysql-server
      MYSQL_DATABASE: zabbix
      MYSQL_USER: zabbix
      MYSQL_PASSWORD: zabbix_pwd
      MYSQL_ROOT_PASSWORD: root_pwd
    networks:
      - zabbix-net
    volumes:
      - xixi:/com-xixi
      - haha:/com-haha

# Network definitions
networks:
  zabbix-net:
    # name: com-zabbix  # officially supported from file format 3.5, but the yum-installed docker-compose 1.18.0 only supports up to 3.3
    ipam:
      driver: default
      config:
        - subnet: 172.30.100.0/24
          # gateway: 172.30.100.254  # the gateway key is currently supported only in file format "version 2"

# Volume definitions
volumes:
  xixi:
  haha:

3. Start the services

[root@elk92 zabbix]# docker-compose up -d # start the services: create and start the containers

[+] Building 0.0s (0/0) docker:default

[+] Running 5/5

✔ Network zabbix_zabbix-net Created 0.1s

✔ Container zabbix-mysql-server-1 Started 0.0s

✔ Container zabbix-zabbix-java-gateway-1 Started 0.0s

✔ Container zabbix-zabbix-server-1 Started 0.0s

✔ Container zabbix-zabbix-web-nginx-mysql-1 Started 0.0s

[root@elk92 zabbix]#

[root@elk92 zabbix]# docker-compose ps -a # show service status; only this Compose project's containers are listed

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

zabbix-mysql-server-1 mysql:8.0.36-oracle "docker-entrypoint.s…" mysql-server 2 minutes ago Up 2 minutes 3306/tcp, 33060/tcp

zabbix-zabbix-java-gateway-1 zabbix/zabbix-java-gateway:alpine-7.2-latest "docker-entrypoint.s…" zabbix-java-gateway 2 minutes ago Up 2 minutes 10052/tcp

zabbix-zabbix-server-1 zabbix/zabbix-server-mysql:alpine-7.2-latest "/usr/bin/docker-ent…" zabbix-server 2 minutes ago Up 2 minutes 0.0.0.0:10051->10051/tcp, :::10051->10051/tcp

zabbix-zabbix-web-nginx-mysql-1 zabbix/zabbix-web-nginx-mysql:alpine-7.2-latest "docker-entrypoint.sh" zabbix-web-nginx-mysql 2 minutes ago Up 2 minutes (healthy) 8443/tcp, 0.0.0.0:90->8080/tcp, :::90->8080/tcp

[root@elk92 zabbix]# docker-compose stop

[root@elk92 zabbix]# docker-compose start

[root@elk92 zabbix]# docker-compose --help # check the help if needed

4. Test access

Zabbix can be reached for testing at http://10.0.0.92:90/.

5. Stop the services and remove the containers

[root@elk92 zabbix]# docker-compose down -t 0

[+] Running 5/5

✔ Container zabbix-zabbix-java-gateway-1 Removed 0.2s

✔ Container zabbix-zabbix-web-nginx-mysql-1 Removed 0.3s

✔ Container zabbix-zabbix-server-1 Removed 0.2s

✔ Container zabbix-mysql-server-1 Removed 0.2s

✔ Network zabbix_zabbix-net Removed 0.2s

[root@elk92 zabbix]#

[root@elk92 zabbix]# docker-compose ps -a

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

[root@elk92 zabbix]# # because named volumes were defined, the data is still there (down keeps named volumes unless -v is given)

[root@elk92 ~]# docker volume inspect zabbix_xixi

[

{

"CreatedAt": "2025-03-24T12:00:02+08:00",

"Driver": "local",

"Labels": {

"com.docker.compose.project": "zabbix",

"com.docker.compose.version": "2.23.0",

"com.docker.compose.volume": "xixi"

},

"Mountpoint": "/var/lib/docker/volumes/zabbix_xixi/_data",

"Name": "zabbix_xixi",

"Options": null,

"Scope": "local"

}

]

[root@elk92 ~]# docker volume inspect zabbix_haha

[

{

"CreatedAt": "2025-03-24T12:00:02+08:00",

"Driver": "local",

"Labels": {

"com.docker.compose.project": "zabbix",

"com.docker.compose.version": "2.23.0",

"com.docker.compose.volume": "haha"

},

"Mountpoint": "/var/lib/docker/volumes/zabbix_haha/_data",

"Name": "zabbix_haha",

"Options": null,

"Scope": "local"

}

]

[root@elk92 ~]#

Deploying WordPress with docker-compose

1. Example configuration

docker-compose.yml

version: '3.8'

services:
  db:
    image: mysql:8.0.36-oracle
    container_name: db
    restart: unless-stopped
    environment:
      - MYSQL_DATABASE=wordpress
      - MYSQL_ALLOW_EMPTY_PASSWORD=yes
      - MYSQL_USER=admin
      - MYSQL_PASSWORD=yinzhengjie
    # Mount a named volume for the database files
    volumes:
      - dbdata:/var/lib/mysql
    networks:
      - wordpress-network

  wordpress:
    depends_on:
      - db
    image: wordpress:6.7.1-php8.1-apache
    container_name: wordpress
    restart: unless-stopped
    ports:
      - "100:80"
    environment:
      - WORDPRESS_DB_HOST=db:3306
      - WORDPRESS_DB_USER=admin
      - WORDPRESS_DB_PASSWORD=yinzhengjie
      - WORDPRESS_DB_NAME=wordpress
    volumes:
      - wordpress:/var/www/html
    networks:
      - wordpress-network

# Define two named volumes, wordpress and dbdata, which the services reference (e.g. - wordpress:/var/www/html)
volumes:
  wordpress:
  dbdata:

networks:
  wordpress-network:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.100.0/24

2. Start WordPress

[root@elk92 wordpress]# docker-compose up -d

[+] Building 0.0s (0/0) docker:default

[+] Running 5/5

✔ Network wordpress_wordpress-network Created 0.1s

✔ Volume "wordpress_dbdata" Created 0.0s

✔ Volume "wordpress_wordpress" Created 0.0s

✔ Container db Started 0.0s

✔ Container wordpress Started 0.0s

[root@elk92 wordpress]#

[root@elk92 wordpress]# docker-compose ps -a

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

db mysql:8.0.36-oracle "docker-entrypoint.s…" db 4 seconds ago Up 3 seconds 3306/tcp, 33060/tcp

wordpress wordpress:6.7.1-php8.1-apache "docker-entrypoint.s…" wordpress 4 seconds ago Up 2 seconds 0.0.0.0:100->80/tcp, :::100->80/tcp

3. Verify

http://10.0.0.92:100/

One-step deployment of an ES cluster with Docker Compose

Based on the official deployment guide at www.elastic.co.

The variables in docker-compose.yml are read from the .env file, so a simple setup only requires editing the .env file.
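Compose reads .env from the project directory and substitutes `${VAR}` references in the YAML. The sketch below imitates that substitution in plain shell to show which values the ES file receives. It is an approximation that assumes simple `KEY=VALUE` lines without quotes or inline comments (Compose's own parser handles more cases), and `load_env` is a hypothetical helper. To see Compose's actual result, `docker-compose config` prints the fully resolved file.

```shell
#!/bin/sh
# load_env FILE: export each KEY=VALUE line; blank lines and '#' comments are skipped.
load_env() {
    while IFS= read -r line || [ -n "$line" ]; do
        case $line in ''|'#'*) continue ;; esac
        export "${line%%=*}=${line#*=}"
    done < "$1"
}

# Example: load_env .env && echo "${ES_PORT}:9200"
```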

1. Configure the environment variable file .env

# Password for the 'elastic' user (at least 6 characters)

ELASTIC_PASSWORD=123456

# Password for the 'kibana_system' user (at least 6 characters)

KIBANA_PASSWORD=123456

# Version of Elastic products

# STACK_VERSION={version}

STACK_VERSION=8.17.3

# Set the cluster name

# CLUSTER_NAME=docker-cluster

CLUSTER_NAME=docker-com-es8

# Set to 'basic' or 'trial' to automatically start the 30-day trial

LICENSE=basic

#LICENSE=trial

# Port to expose Elasticsearch HTTP API to the host

# ES_PORT=9200

ES_PORT=19200

#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host

KIBANA_PORT=5601

KIBANA_I18N_LOCALE=zh-CN

#KIBANA_PORT=80

# Increase or decrease based on the available host memory (in bytes)

MEM_LIMIT=1073741824

# Project namespace (defaults to the current folder name if not set)

#COMPOSE_PROJECT_NAME=myproject

2. Write the Docker Compose file docker-compose.yaml

version: "2.2"

services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
    user: "0"
    command: >
      bash -c '
        if [ x${ELASTIC_PASSWORD} == x ]; then
          echo "Set the ELASTIC_PASSWORD environment variable in the .env file";
          exit 1;
        elif [ x${KIBANA_PASSWORD} == x ]; then
          echo "Set the KIBANA_PASSWORD environment variable in the .env file";
          exit 1;
        fi;
        if [ ! -f config/certs/ca.zip ]; then
          echo "Creating CA";
          bin/elasticsearch-certutil ca --silent --pem -out config/certs/ca.zip;
          unzip config/certs/ca.zip -d config/certs;
        fi;
        if [ ! -f config/certs/certs.zip ]; then
          echo "Creating certs";
          echo -ne \
          "instances:\n"\
          "  - name: es01\n"\
          "    dns:\n"\
          "      - es01\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es02\n"\
          "    dns:\n"\
          "      - es02\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          "  - name: es03\n"\
          "    dns:\n"\
          "      - es03\n"\
          "      - localhost\n"\
          "    ip:\n"\
          "      - 127.0.0.1\n"\
          > config/certs/instances.yml;
          bin/elasticsearch-certutil cert --silent --pem -out config/certs/certs.zip --in config/certs/instances.yml --ca-cert config/certs/ca/ca.crt --ca-key config/certs/ca/ca.key;
          unzip config/certs/certs.zip -d config/certs;
        fi;
        echo "Setting file permissions"
        chown -R root:root config/certs;
        find . -type d -exec chmod 750 \{\} \;;
        find . -type f -exec chmod 640 \{\} \;;
        echo "Waiting for Elasticsearch availability";
        until curl -s --cacert config/certs/ca/ca.crt https://es01:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST --cacert config/certs/ca/ca.crt -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" https://es01:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "All done!";
      '
    healthcheck:
      test: ["CMD-SHELL", "[ -f config/certs/es01/es01.crt ]"]
      interval: 1s
      timeout: 5s
      retries: 120

  es01:
    depends_on:
      setup:
        condition: service_healthy
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata01:/usr/share/elasticsearch/data
    ports:
      - ${ES_PORT}:9200
    environment:
      - node.name=es01
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es02,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es01/es01.key
      - xpack.security.http.ssl.certificate=certs/es01/es01.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es01/es01.key
      - xpack.security.transport.ssl.certificate=certs/es01/es01.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es02:
    depends_on:
      - es01
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata02:/usr/share/elasticsearch/data
    environment:
      - node.name=es02
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es03
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es02/es02.key
      - xpack.security.http.ssl.certificate=certs/es02/es02.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es02/es02.key
      - xpack.security.transport.ssl.certificate=certs/es02/es02.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  es03:
    depends_on:
      - es02
    image: docker.elastic.co/elasticsearch/elasticsearch:${STACK_VERSION}
    volumes:
      - certs:/usr/share/elasticsearch/config/certs
      - esdata03:/usr/share/elasticsearch/data
    environment:
      - node.name=es03
      - cluster.name=${CLUSTER_NAME}
      - cluster.initial_master_nodes=es01,es02,es03
      - discovery.seed_hosts=es01,es02
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - bootstrap.memory_lock=true
      - xpack.security.enabled=true
      - xpack.security.http.ssl.enabled=true
      - xpack.security.http.ssl.key=certs/es03/es03.key
      - xpack.security.http.ssl.certificate=certs/es03/es03.crt
      - xpack.security.http.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.enabled=true
      - xpack.security.transport.ssl.key=certs/es03/es03.key
      - xpack.security.transport.ssl.certificate=certs/es03/es03.crt
      - xpack.security.transport.ssl.certificate_authorities=certs/ca/ca.crt
      - xpack.security.transport.ssl.verification_mode=certificate
      - xpack.license.self_generated.type=${LICENSE}
      - xpack.ml.use_auto_machine_memory_percent=true
    mem_limit: ${MEM_LIMIT}
    ulimits:
      memlock:
        soft: -1
        hard: -1
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
      - kibanaconfig:/usr/share/kibana/config
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - I18N_LOCALE=zh-CN
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

volumes:
  certs:
    driver: local
  esdata01:
    driver: local
  esdata02:
    driver: local
  esdata03:
    driver: local
  kibanadata:
    driver: local
  kibanaconfig:

3. Start the services

[root@elk92 elasticsearch]# docker-compose up -d

[+] Building 0.0s (0/0) docker:default

[+] Running 11/11

✔ Network elasticsearch_default Created 0.1s

✔ Volume "elasticsearch_esdata03" Created 0.0s

✔ Volume "elasticsearch_kibanadata" Created 0.0s

✔ Volume "elasticsearch_certs" Created 0.0s

✔ Volume "elasticsearch_esdata01" Created 0.0s

✔ Volume "elasticsearch_esdata02" Created 0.0s

✔ Container elasticsearch-setup-1 Healthy 0.1s

✔ Container elasticsearch-es01-1 Healthy 0.0s

✔ Container elasticsearch-es02-1 Healthy 0.0s

✔ Container elasticsearch-es03-1 Healthy 0.0s

✔ Container elasticsearch-kibana-1 Started 0.0s

4. Check the service status again

[root@elk92 elasticsearch]# docker-compose ps -a

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

elasticsearch-es01-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es01 2 minutes ago Up 2 minutes (healthy) 9300/tcp, 0.0.0.0:19200->9200/tcp, :::19200->9200/tcp

elasticsearch-es02-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es02 2 minutes ago Up 2 minutes (healthy) 9200/tcp, 9300/tcp

elasticsearch-es03-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es03 2 minutes ago Up 2 minutes (healthy) 9200/tcp, 9300/tcp

elasticsearch-kibana-1 docker.elastic.co/kibana/kibana:8.17.3 "/bin/tini -- /usr/l…" kibana 2 minutes ago Up 25 seconds (health: starting) 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp

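elasticsearch-setup-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" setup 2 minutes ago Exited (0) 14 seconds ago

5. Access the Kibana Web UI

Open the following address in a browser:

http://10.0.0.92:5601/

6. Configuring Kibana through environment variables

Define a variable in .env and reference it in the Compose file to set Kibana's configuration.

1. Prepare the environment

Contents of the .env file: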

# Password for the 'elastic' user (at least 6 characters)

ELASTIC_PASSWORD=123456

# Password for the 'kibana_system' user (at least 6 characters)

KIBANA_PASSWORD=123456

# Version of Elastic products

# STACK_VERSION={version}

STACK_VERSION=8.17.3

# Set the cluster name

# CLUSTER_NAME=docker-cluster

CLUSTER_NAME=docker-com-es8

# Set to 'basic' or 'trial' to automatically start the 30-day trial

LICENSE=basic

#LICENSE=trial

# Port to expose Elasticsearch HTTP API to the host

# ES_PORT=9200

ES_PORT=19200

#ES_PORT=127.0.0.1:9200

# Port to expose Kibana to the host

KIBANA_PORT=5601

# New variable added to .env; it is referenced in docker-compose.yaml below
I18N_LOCALE=zh-CN

#KIBANA_PORT=80

# Increase or decrease based on the available host memory (in bytes)

MEM_LIMIT=1073741824

# Project namespace (defaults to the current folder name if not set)

#COMPOSE_PROJECT_NAME=myproject

Contents of docker-compose.yaml:

...............
          "curl -s --cacert config/certs/ca/ca.crt https://localhost:9200 | grep -q 'missing authentication credentials'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

  kibana:
    depends_on:
      es01:
        condition: service_healthy
      es02:
        condition: service_healthy
      es03:
        condition: service_healthy
    image: docker.elastic.co/kibana/kibana:${STACK_VERSION}
    volumes:
      - certs:/usr/share/kibana/config/certs
      - kibanadata:/usr/share/kibana/data
    ports:
      - ${KIBANA_PORT}:5601
    environment:
      - SERVERNAME=kibana
      - I18N_LOCALE=${I18N_LOCALE} # reference the variable we added to .env to configure Kibana
      - ELASTICSEARCH_HOSTS=https://es01:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      - ELASTICSEARCH_SSL_CERTIFICATEAUTHORITIES=config/certs/ca/ca.crt
    mem_limit: ${MEM_LIMIT}
    healthcheck:
      test:
        [
          "CMD-SHELL",
          "curl -s -I http://localhost:5601 | grep -q 'HTTP/1.1 302 Found'",
        ]
      interval: 10s
      timeout: 10s
      retries: 120

volumes:
  certs:
    driver: local
  esdata01:
    driver: local
  esdata02:
    driver: local
  esdata03:
    driver: local
  kibanadata:
    driver: local

2. Start the services

[root@elk92 elasticsearch]# docker-compose up -d

[+] Building 0.0s (0/0) docker:default

[+] Running 6/6

✔ Network elasticsearch_default Created 0.1s

✔ Container elasticsearch-setup-1 Healthy 0.0s

✔ Container elasticsearch-es01-1 Healthy 0.0s

✔ Container elasticsearch-es02-1 Healthy 0.0s

✔ Container elasticsearch-es03-1 Healthy 0.0s

✔ Container elasticsearch-kibana-1 Started 0.0s

[root@elk92 elasticsearch]#

[root@elk92 elasticsearch]#

[root@elk92 elasticsearch]# docker-compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

elasticsearch-es01-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es01 3 minutes ago Up 3 minutes (healthy) 9300/tcp, 0.0.0.0:19200->9200/tcp, :::19200->9200/tcp

elasticsearch-es02-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es02 3 minutes ago Up 3 minutes (healthy) 9200/tcp, 9300/tcp

elasticsearch-es03-1 docker.elastic.co/elasticsearch/elasticsearch:8.17.3 "/bin/tini -- /usr/l…" es03 3 minutes ago Up 3 minutes (healthy) 9200/tcp, 9300/tcp

elasticsearch-kibana-1 docker.elastic.co/kibana/kibana:8.17.3 "/bin/tini -- /usr/l…" kibana 3 minutes ago Up About a minute (healthy) 0.0.0.0:5601->5601/tcp, :::5601->5601/tcp

[root@elk92 elasticsearch]#

3. Test access

(Omitted.)

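Docker registries

Official registry

- Link: https://hub.docker.com/

Third-party registries

- Alibaba Cloud

- Tencent Cloud

- Huawei Cloud

- ...

Self-hosted registries

- registry: open source and lightweight, uses few resources; well suited to learning.

- harbor: built by VMware on top of registry, with a rich Web UI, RBAC access control, high-availability options and more; easy to manage.

Quick start: a private registry with registry

1. Deploy registry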

[root@elk92 ~]# docker run -d -p 5000:5000 --restart always --name registry registry:2.8.3

Unable to find image 'registry:2.8.3' locally

2.8.3: Pulling from library/registry

44cf07d57ee4: Already exists

bbbdd6c6894b: Pull complete

8e82f80af0de: Pull complete

3493bf46cdec: Pull complete

6d464ea18732: Pull complete

Digest: sha256:a3d8aaa63ed8681a604f1dea0aa03f100d5895b6a58ace528858a7b332415373

Status: Downloaded newer image for registry:2.8.3

9405a9722d8760681e79266fea014580bcc1bf22506f682a37a85925c147cac2

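[root@elk92 ~]#

2. Query the registry from a browser

- Link: http://10.0.0.92:5000/v2/_catalog

The output is {"repositories":[]}; the repositories stored in the registry are listed inside the [] (empty so far).

3. Push an image

A registry that is not served over HTTPS rejects the push by default (see the common error below). Fix this by listing the registry under insecure-registries in /etc/docker/daemon.json, which lets Docker use plain HTTP for that address, and then restarting Docker.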
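Because daemon.json must be valid JSON (a stray comma keeps dockerd from starting), it can help to generate the list rather than type it. A small sketch; `write_insecure_registries` is a hypothetical helper, and it overwrites the target file, so merge by hand if your daemon.json already contains other keys.

```shell
#!/bin/sh
# write_insecure_registries FILE REGISTRY...: write {"insecure-registries": [...]} to FILE.
write_insecure_registries() {
    file=$1
    shift
    list=""
    for reg in "$@"; do
        list="${list:+$list,}\"$reg\""    # join entries as "a","b"
    done
    printf '{\n  "insecure-registries": [%s]\n}\n' "$list" > "$file"
}

# Example (as root):
#   write_insecure_registries /etc/docker/daemon.json 10.0.0.92:5000
#   systemctl restart docker
```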

[root@elk93 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000"]

}

[root@elk93 ~]#

[root@elk93 ~]# systemctl restart docker

[root@elk93 ~]#

[root@elk93 ~]# docker info | grep "Insecure Registries" -A 2

Insecure Registries:

10.0.0.92:5000

127.0.0.0/8

[root@elk93 ~]#

[root@elk93 ~]# # tag the image first

[root@elk93 ~]# docker image tag nginx:1.27.4-alpine 10.0.0.92:5000/com-web/nginx:1.27.4-alpine

[root@elk93 ~]# # then push it to the private registry

[root@elk93 ~]# docker image push 10.0.0.92:5000/com-web/nginx:1.27.4-alpine

The push refers to repository [10.0.0.92:5000/com-web/nginx]

c18897d5e3dd: Pushed

9af9e76ea07f: Pushed

f1f70b13aacc: Pushed

252b6db79fae: Pushed

c9ce8cb4e76a: Pushed

8f3c313eb124: Pushed

c1761f3c364a: Pushed

08000c18d16d: Pushed

1.27.4-alpine: digest: sha256:ebee5f752c662a314744c6e1e6ba57f10f15a98f5c797872e0505a1dbfa56720 size: 1989

[root@elk93 ~]#

4. Query the registry again

- Link: http://10.0.0.92:5000/v2/_catalog, output: {"repositories":["root/alpine"]}

5. Pull the image from the registry on a client

[root@elk91 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000"]

}

[root@elk91 ~]#

[root@elk91 ~]# systemctl restart docker.service

[root@elk91 ~]#

[root@elk91 ~]# docker info | grep "Insecure Registries" -A 2

Insecure Registries:

10.0.0.92:5000

127.0.0.0/8

[root@elk91 ~]#

[root@elk91 ~]# docker image pull 10.0.0.92:5000/com-web/nginx:1.27.4-alpine

1.27.4-alpine: Pulling from com-web/nginx

f18232174bc9: Pull complete

ccc35e35d420: Pull complete

43f2ec460bdf: Pull complete

984583bcf083: Pull complete

8d27c072a58f: Pull complete

ab3286a73463: Pull complete

6d79cc6084d4: Pull complete

0c7e4c092ab7: Pull complete

Digest: sha256:ebee5f752c662a314744c6e1e6ba57f10f15a98f5c797872e0505a1dbfa56720

Status: Downloaded newer image for 10.0.0.92:5000/com-web/nginx:1.27.4-alpine

10.0.0.92:5000/com-web/nginx:1.27.4-alpine

[root@elk91 ~]#

[root@elk91 ~]# docker image ls 10.0.0.92:5000/com-web/nginx

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.92:5000/com-web/nginx 1.27.4-alpine 1ff4bb4faebc 6 weeks ago 47.9MB

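[root@elk91 ~]#

6. Reclaim registry storage

Deleting a repository directory alone does not free its disk space; afterwards run registry garbage-collect /etc/docker/registry/config.yml to delete the blobs that are no longer referenced.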

[root@elk92 elasticsearch]# docker exec -it registry sh

/ # du -sh /var/lib/registry/docker/registry/v2/*

20.0M /var/lib/registry/docker/registry/v2/blobs

148.0K /var/lib/registry/docker/registry/v2/repositories

/ #

/ # rm -rf /var/lib/registry/docker/registry/v2/repositories/com-web/

/ #

/ # du -sh /var/lib/registry/docker/registry/v2/*

20.0M /var/lib/registry/docker/registry/v2/blobs

4.0K /var/lib/registry/docker/registry/v2/repositories

/ #

/ # registry garbage-collect /etc/docker/registry/config.yml

0 blobs marked, 10 blobs and 0 manifests eligible for deletion

blob eligible for deletion: sha256:8d27c072a58f81ecf2425172ac0e5b25010ff2d014f89de35b90104e462568eb

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/8d/8d27c072a58f81ecf2425172ac0e5b25010ff2d014f89de35b90104e462568eb go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

blob eligible for deletion: sha256:984583bcf083fa6900b5e7834795a9a57a9b4dfe7448d5350474f5d309625ece

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/98/984583bcf083fa6900b5e7834795a9a57a9b4dfe7448d5350474f5d309625ece go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

blob eligible for deletion: sha256:ccc35e35d420d6dd115290f1074afc6ad1c474b6f94897434e6befd33be781d2

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/cc/ccc35e35d420d6dd115290f1074afc6ad1c474b6f94897434e6befd33be781d2 go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

blob eligible for deletion: sha256:ebee5f752c662a314744c6e1e6ba57f10f15a98f5c797872e0505a1dbfa56720

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/eb/ebee5f752c662a314744c6e1e6ba57f10f15a98f5c797872e0505a1dbfa56720 go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

blob eligible for deletion: sha256:f18232174bc91741fdf3da96d85011092101a032a93a388b79e99e69c2d5c870

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/1f/1ff4bb4faebcfb1f7e01144fa9904a570ab9bab88694457855feb6c6bba3fa07 go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

blob eligible for deletion: sha256:ab3286a7346303a31b69a5189f63f1414cc1de44e397088dcd07edb322df1fe9

INFO[0000] Deleting blob: /docker/registry/v2/blobs/sha256/ab/ab3286a7346303a31b69a5189f63f1414cc1de44e397088dcd07edb322df1fe9 go.version=go1.20.8 instance.id=a247b4da-0017-48d0-a874-a2e8ff5aa4eb service=registry

/ #

/ #

/ # du -sh /var/lib/registry/docker/registry/v2/*

48.0K /var/lib/registry/docker/registry/v2/blobs

4.0K /var/lib/registry/docker/registry/v2/repositories

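/ #

PS: caveats and summary

A registry bug

If an image that was deleted is pushed to registry again, the registry container must be restarted before the push succeeds!

Summary

As deployed here, registry has no authentication (it can do htpasswd or token authentication, but only with extra configuration), its web interface is very limited, and re-pushing images is problematic. In production, avoid using it directly and deploy an enterprise registry with Harbor instead. (Demo omitted.)

A common error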

[root@elk93 ~]# docker image push 10.0.0.92:5000/com-web/nginx:1.27.4-alpine

The push refers to repository [10.0.0.92:5000/com-web/nginx]

Get "https://10.0.0.92:5000/v2/": http: server gave HTTP response to HTTPS client

[root@elk93 ~]#

Cause

By default Docker pushes over HTTPS, but our private registry speaks plain HTTP, so the remote registry must be declared insecure.

Solution

[root@elk93 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000"]

}

[root@elk93 ~]#

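[root@elk93 ~]# systemctl restart docker

Bonus: reaching blocked sites from the server through a proxy

1. Configure the proxy

Set the proxy as global environment variables with export (they apply to the whole current shell session).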
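`export` applies the proxy to every later command in that shell until it is removed with `unset http_proxy https_proxy`. To scope it to a single command, put the assignments in front of that command instead. A sketch, reusing the proxy address from this example; `with_proxy` is a hypothetical helper. These variables only affect programs started from the shell, such as curl: `docker pull` is carried out by the dockerd daemon, which does not inherit your shell's environment.

```shell
#!/bin/sh
# Proxy address from the example; override with PROXY=... if needed.
PROXY=${PROXY:-http://10.0.0.1:7890}

# with_proxy CMD [ARGS...]: run one command with the proxy variables set,
# leaving the current shell's environment unchanged.
with_proxy() {
    http_proxy=$PROXY https_proxy=$PROXY "$@"
}

# Example: with_proxy curl -I https://www.google.com
```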

[root@elk92 ~]# export http_proxy=http://10.0.0.1:7890

[root@elk92 ~]# export https_proxy=http://10.0.0.1:7890

[root@elk92 ~]# env | grep -i proxy

https_proxy=http://10.0.0.1:7890

http_proxy=http://10.0.0.1:7890

[root@elk92 ~]#

2. Verify

[root@elk92 ~]# curl www.google.com

Deploying Harbor and basic usage

1. Download Harbor

wget https://github.com/goharbor/harbor/releases/download/v2.12.2/harbor-offline-installer-v2.12.2.tgz

2. Extract Harbor

[root@elk93 ~]# tar xf harbor-offline-installer-v2.12.2.tgz -C /usr/local/

3. Prepare the configuration file

[root@elk93 ~]# ll /usr/local/harbor/

total 636508

drwxr-xr-x 2 root root 4096 Mar 24 16:46 ./

drwxr-xr-x 19 root root 4096 Mar 24 16:46 ../

-rw-r--r-- 1 root root 3646 Jan 16 22:10 common.sh

-rw-r--r-- 1 root root 651727378 Jan 16 22:11 harbor.v2.12.2.tar.gz

-rw-r--r-- 1 root root 14288 Jan 16 22:10 harbor.yml.tmpl

-rwxr-xr-x 1 root root 1975 Jan 16 22:10 install.sh*

-rw-r--r-- 1 root root 11347 Jan 16 22:10 LICENSE

-rwxr-xr-x 1 root root 2211 Jan 16 22:10 prepare*

[root@elk93 ~]#

[root@elk93 ~]# cp /usr/local/harbor/harbor.yml{.tmpl,}

[root@elk93 ~]#

[root@elk93 ~]# ll /usr/local/harbor/harbor.yml*

-rw-r--r-- 1 root root 14288 Mar 24 16:46 /usr/local/harbor/harbor.yml

-rw-r--r-- 1 root root 14288 Jan 16 22:10 /usr/local/harbor/harbor.yml.tmpl

[root@elk93 ~]#

Edit the configuration file:
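The top-level scalar settings changed in the vim session that follows (`hostname`, `harbor_admin_password`, `data_volume`) can also be set non-interactively. A minimal sketch using GNU `sed -i`; `set_top_key` is a hypothetical helper that only rewrites an existing unindented `key: value` line and assumes the value contains no `|` or `&`. It does not comment out the https block, which still has to be done by hand.

```shell
#!/bin/sh
# set_top_key FILE KEY VALUE: replace the unindented "KEY: ..." line in FILE (GNU sed).
set_top_key() {
    sed -i "s|^$2:.*|$2: $3|" "$1"
}

# Example:
#   set_top_key /usr/local/harbor/harbor.yml hostname 10.0.0.93
#   set_top_key /usr/local/harbor/harbor.yml harbor_admin_password 1
#   set_top_key /usr/local/harbor/harbor.yml data_volume /com/data/harbor
```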

[root@elk93 ~]# vim /usr/local/harbor/harbor.yml
...
hostname: 10.0.0.93
...
## https related config
#https:
#  # https port for harbor, default is 443
#  port: 443
#  # The path of cert and key files for nginx
#  certificate: /your/certificate/path
#  private_key: /your/private/key/path
#  # enable strong ssl ciphers (default: false)
#  # strong_ssl_ciphers: false
...
harbor_admin_password: 1
...
data_volume: /com/data/harbor
...

4. Install Harbor

[root@elk93 ~]# /usr/local/harbor/install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 20.10.24

[Step 2]: loading Harbor images ...

...

[Step 5]: starting Harbor ...

[+] Building 0.0s (0/0) docker:default

[+] Running 10/10

✔ Network harbor_harbor Created 0.1s

✔ Container harbor-log Started 0.0s

✔ Container harbor-jobservice Started 0.0s

✔ Container nginx Started 0.0s

✔ ----Harbor has been installed and started successfully.----

[root@elk93 ~]#

5. Access the Harbor web UI

http://10.0.0.93/harbor/projects

Username: admin

Password: 1

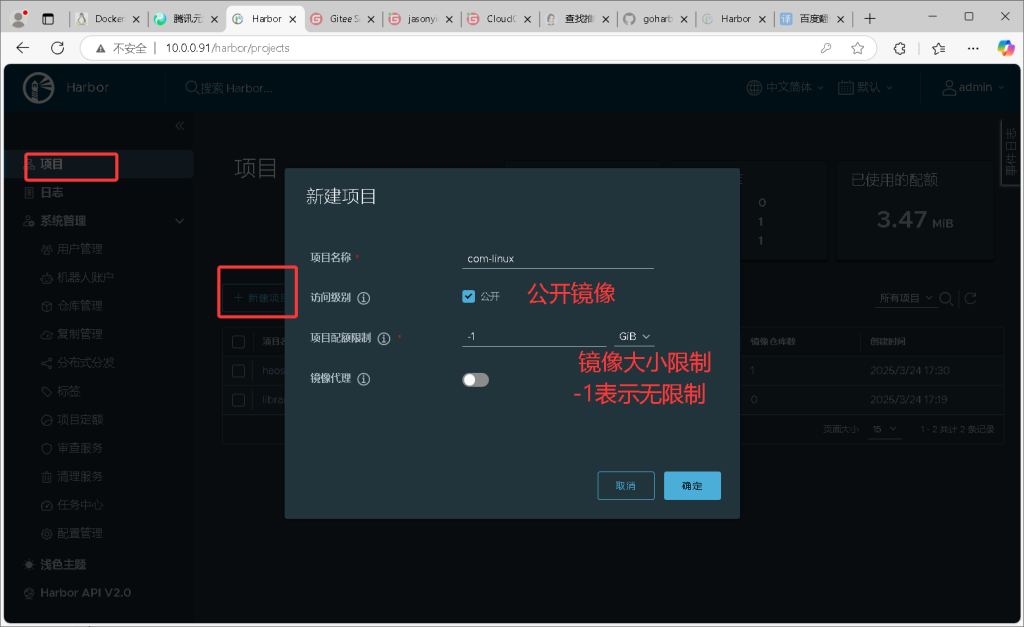

6. Create a project

Suggested name: "com-linux"
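Instead of the web UI, the project can probably also be created through Harbor's REST API. This is a minimal sketch, assuming the v2.0 API endpoint POST /api/v2.0/projects accepts HTTP basic auth for the admin account; harbor_project_payload and harbor_create_project are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: create a Harbor project from the command line.
# Assumptions: Harbor v2.0 REST API with HTTP basic auth; curl is installed.

# Build the JSON request body; the project is private unless $2 is "true".
harbor_project_payload() {
  local name="$1" public="${2:-false}"
  printf '{"project_name":"%s","metadata":{"public":"%s"}}' "$name" "$public"
}

# POST the payload. curl -f makes the function fail on HTTP errors,
# e.g. when the project already exists.
harbor_create_project() {
  local url="$1" user="$2" password="$3" name="$4" public="${5:-false}"
  curl -fsS -u "${user}:${password}" \
    -H 'Content-Type: application/json' \
    -X POST "${url}/api/v2.0/projects" \
    -d "$(harbor_project_payload "$name" "$public")"
}
```

Usage for this lab: harbor_create_project http://10.0.0.93 admin 1 com-linux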

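7. Push an image to the Harbor registry

Note: you must log in to Harbor with docker login before you can push. Because this Harbor instance is served over plain HTTP, the client's Docker daemon must also list it under insecure-registries (see daemon.json below).

Note: always log out with docker logout after pushing. docker login saves the credentials in /root/.docker/config.json, and only logging out removes them.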

[root@elk92 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000","10.0.0.93"]

}

[root@elk92 ~]#

[root@elk92 ~]# systemctl restart docker.service

[root@elk92 ~]#

[root@elk92 ~]# docker info | grep "Insecure Registries" -A 2

Insecure Registries:

10.0.0.92:5000

10.0.0.93

[root@elk92 ~]#
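An invalid daemon.json prevents dockerd from starting after the restart, so generating the file from a script avoids hand-typed JSON mistakes. This is a sketch, assuming daemon.json holds nothing except the insecure-registries list (the function overwrites the file); write_daemon_json is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: write a daemon.json containing only an insecure-registries list.
# Assumption: no other settings need to be preserved (the file is overwritten).
write_daemon_json() {
  local file="$1"; shift
  local list="" reg
  for reg in "$@"; do
    # Append each registry as a quoted JSON string, comma-separated.
    list="${list:+$list,}\"$reg\""
  done
  printf '{\n  "insecure-registries": [%s]\n}\n' "$list" > "$file"
}
```

Usage on elk92 (and likewise on elk91): write_daemon_json /etc/docker/daemon.json 10.0.0.92:5000 10.0.0.93, then restart docker.service and check docker info as above.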

[root@elk92 ~]# docker tag com-linux:v2.15 10.0.0.93/com-linux/com-linux:v2.15

[root@elk92 ~]#

[root@elk92 ~]# docker login -u admin -p 1 10.0.0.93 # log in non-interactively; omit -u and -p to be prompted instead

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@elk92 ~]#

[root@elk92 ~]# cat /root/.docker/config.json;echo

{

"auths": {

"10.0.0.93": {

"auth": "YWRtaW46MQ=="

}

}

}

[root@elk92 ~]#

[root@elk92 ~]# echo YWRtaW46MQ== | base64 -d;echo

admin:1

[root@elk92 ~]#
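The decode above shows that the auth field is only the base64 encoding of user:password: it is encoded, not encrypted, which is why logging out matters. A sketch of both directions, assuming GNU coreutils base64; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: the "auth" value that docker login stores in config.json
# is base64("user:password").

# Encode a user and password the same way.
registry_auth_field() {
  printf '%s:%s' "$1" "$2" | base64
}

# Decode an auth value back to "user:password".
registry_auth_decode() {
  printf '%s' "$1" | base64 -d
}
```

For example, registry_auth_field admin 1 produces YWRtaW46MQ==, the same value shown in config.json above.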

[root@elk92 ~]# docker push 10.0.0.93/com-linux/com-linux:v2.15

The push refers to repository [10.0.0.93/com-linux/com-linux]

767c14dc78c4: Pushed

8855adb3b2f9: Pushed

51a0299acc89: Pushed

ebe19e8e2e2a: Pushed

c2b3b3e0dfdd: Pushed

059f5b7d2971: Pushed

08000c18d16d: Pushed

v2.15: digest: sha256:3e426de7fe466de0c975c279d3ac4e0bf07b869d4fe7b5cc43c7107bba75de72 size: 1782

[root@elk92 ~]#

[root@elk92 ~]# docker logout 10.0.0.93 # always log out when you are done

Removing login credentials for 10.0.0.93

[root@elk92 ~]#

[root@elk92 ~]# cat /root/.docker/config.json;echo

{

"auths": {}

}

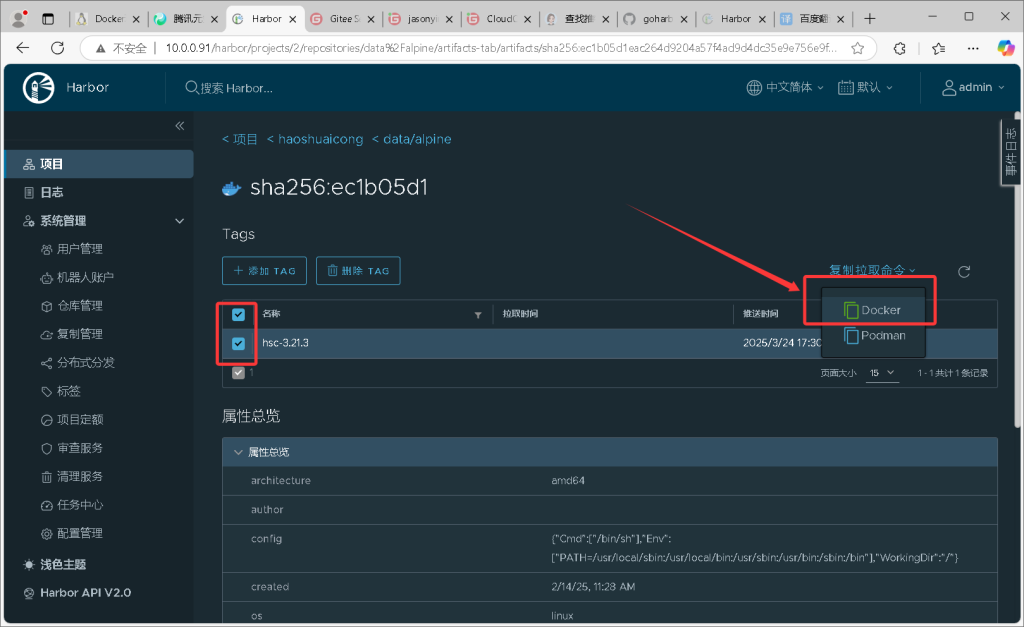
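The login, push and logout steps can be wrapped so that the logout always runs, even when the push fails. The wrapper also passes the password through --password-stdin, as the login warning recommends. This is a sketch; HARBOR_PASSWORD and push_to_harbor are names made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: log in, push one image, and always log out afterwards so the
# credentials do not remain in /root/.docker/config.json.
# Assumption: the password is provided in HARBOR_PASSWORD (a variable name
# chosen for this example).
push_to_harbor() {
  local registry="$1" user="$2" image="$3" rc
  # --password-stdin keeps the password off the command line.
  printf '%s' "$HARBOR_PASSWORD" |
    docker login -u "$user" --password-stdin "$registry" || return 1
  docker push "$image"
  rc=$?
  # Runs whether or not the push succeeded.
  docker logout "$registry"
  return "$rc"
}
```

Usage: HARBOR_PASSWORD=1 push_to_harbor 10.0.0.93 admin 10.0.0.93/com-linux/com-linux:v2.15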

8. Example: pulling an image from Harbor

The pull command can be copied from the Harbor web UI, as shown in the figure below.

[root@elk91 ~]# cat /etc/docker/daemon.json

{

"insecure-registries": ["10.0.0.92:5000","10.0.0.93"]

}

[root@elk91 ~]#

[root@elk91 ~]# systemctl restart docker.service

[root@elk91 ~]#

[root@elk91 ~]# docker info | grep "Insecure Registries" -A 2

Insecure Registries:

10.0.0.92:5000

10.0.0.93

[root@elk91 ~]#

[root@elk91 ~]# docker pull 10.0.0.93/com-linux/com-linux:v2.15

v2.15: Pulling from com-linux/com-linux

f18232174bc9: Already exists

fc49d2e4a785: Pull complete

7dd305ee43a4: Pull complete

e36b2eaa6a94: Pull complete

b67fecf1bbf8: Pull complete

76e5593f66e2: Pull complete

fb18e9be5a61: Pull complete

Digest: sha256:3e426de7fe466de0c975c279d3ac4e0bf07b869d4fe7b5cc43c7107bba75de72

Status: Downloaded newer image for 10.0.0.93/com-linux/com-linux:v2.15

10.0.0.93/com-linux/com-linux:v2.15

[root@elk91 ~]#

[root@elk91 ~]#

[root@elk91 ~]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

10.0.0.93/com-linux/com-linux v2.15 7868472fa986 3 days ago 34.6MB

10.0.0.92:5000/com-web/nginx 1.27.4-alpine 1ff4bb4faebc 6 weeks ago 47.9MB

[root@elk91 ~]#

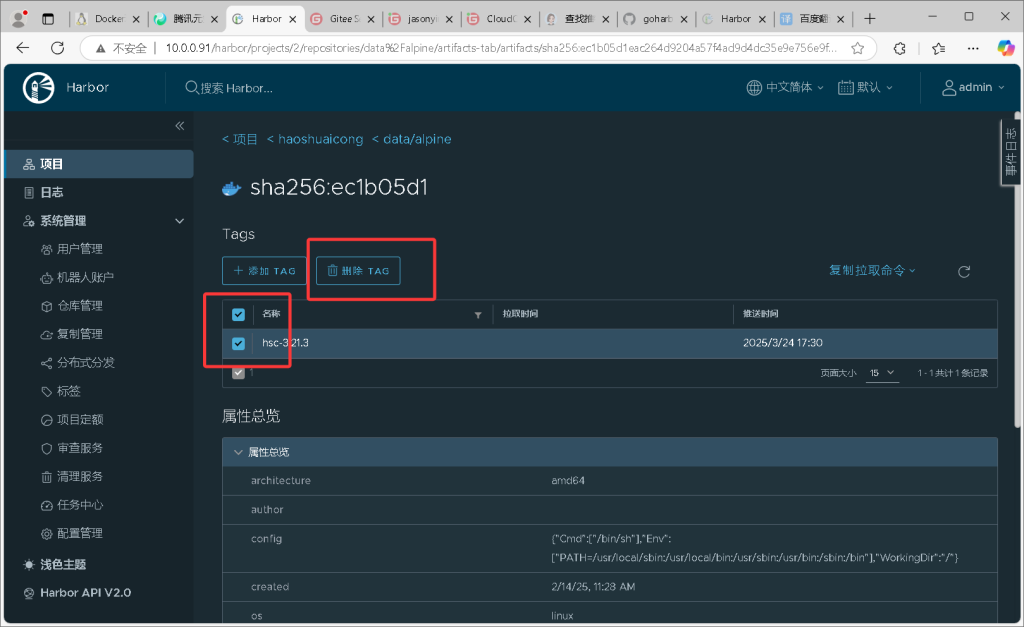
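The digest printed by docker push on elk92 and the one printed by docker pull on elk91 are identical, which confirms that both hosts hold the same image. This is a sketch that reads that digest from a local image, assuming the image was pushed to or pulled from a registry so that RepoDigests is populated; image_digest is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: print the registry digest of a local image.
# Assumption: RepoDigests is set, i.e. the image went through a registry.
image_digest() {
  # Entries look like <repository>@sha256:<hex>; keep the part after '@'.
  docker image inspect --format '{{index .RepoDigests 0}}' "$1" | sed 's/.*@//'
}
```

On elk91, image_digest 10.0.0.93/com-linux/com-linux:v2.15 can then be compared with the digest reported by docker push on elk92.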

9. Delete an image

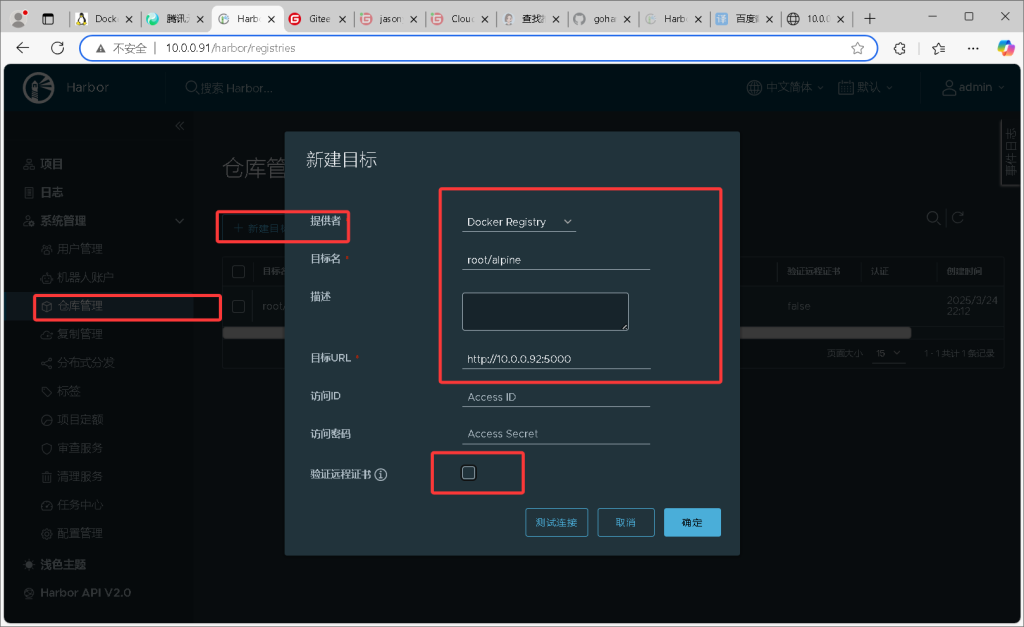

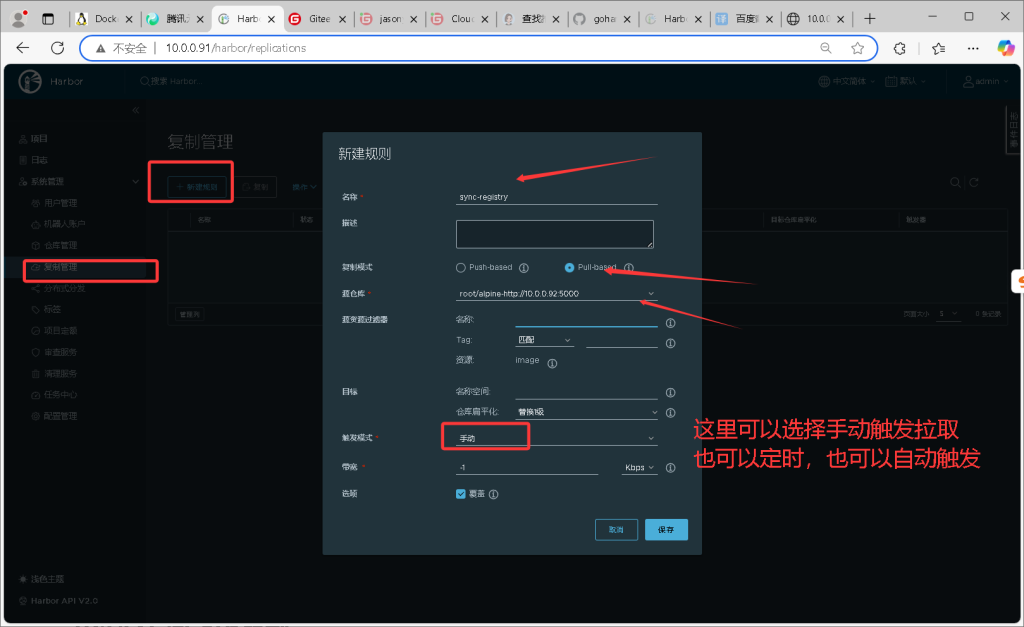

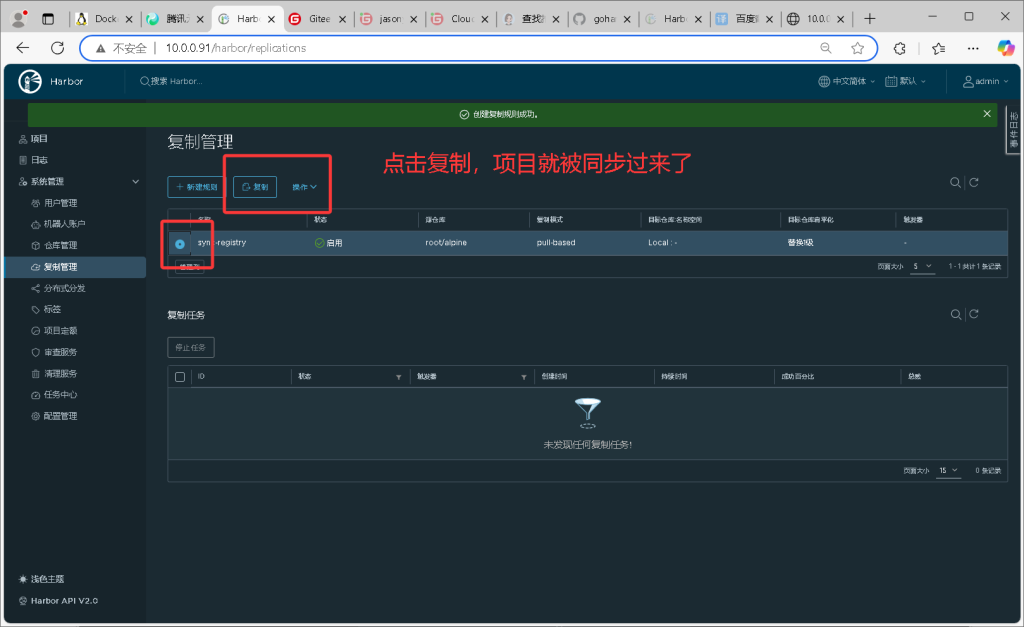

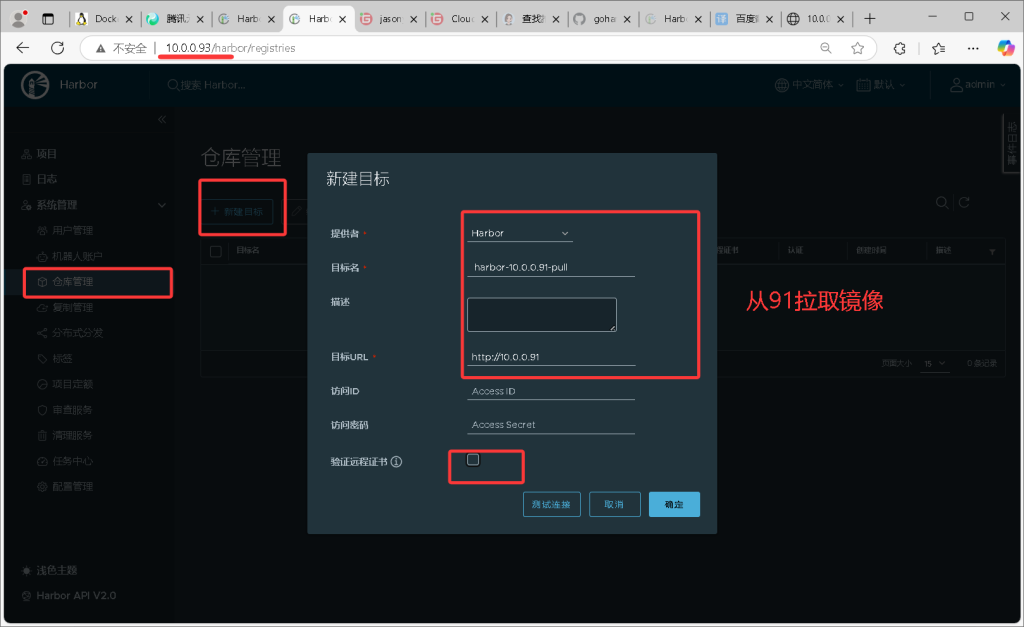

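Migrating docker-registry to the Harbor registry

Provider: Docker Registry

Enter a name for the endpoint and the registry's address. If the registry does not use SSL, leave certificate verification (Verify Remote Cert) unchecked.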

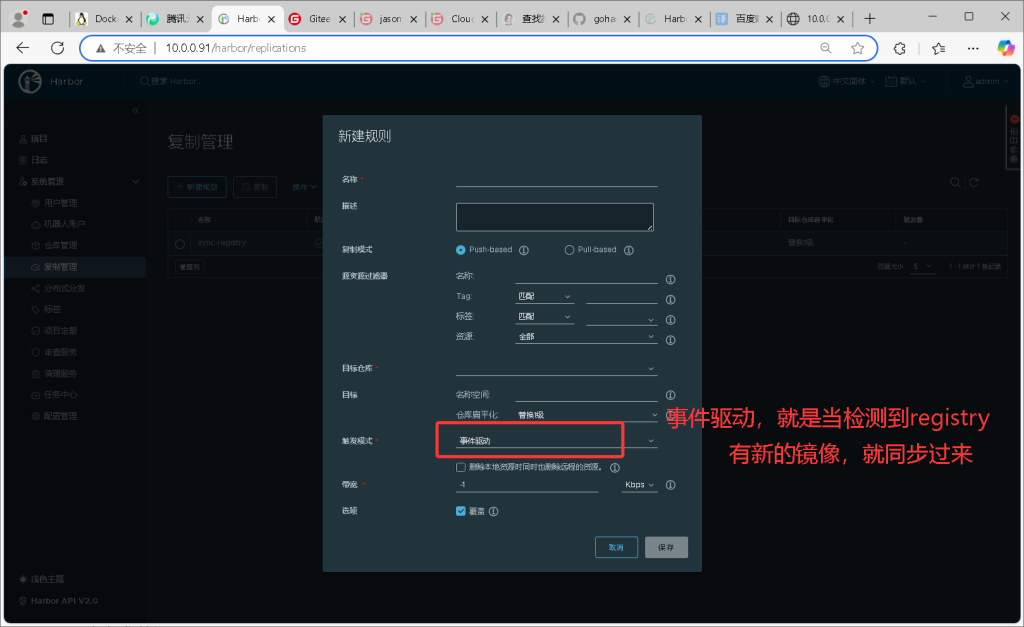

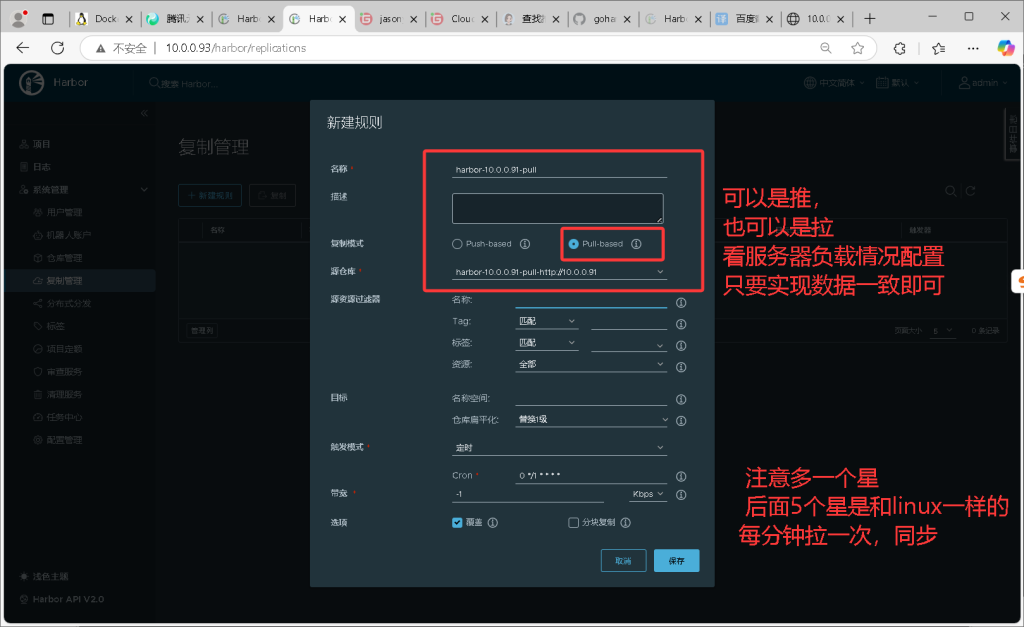

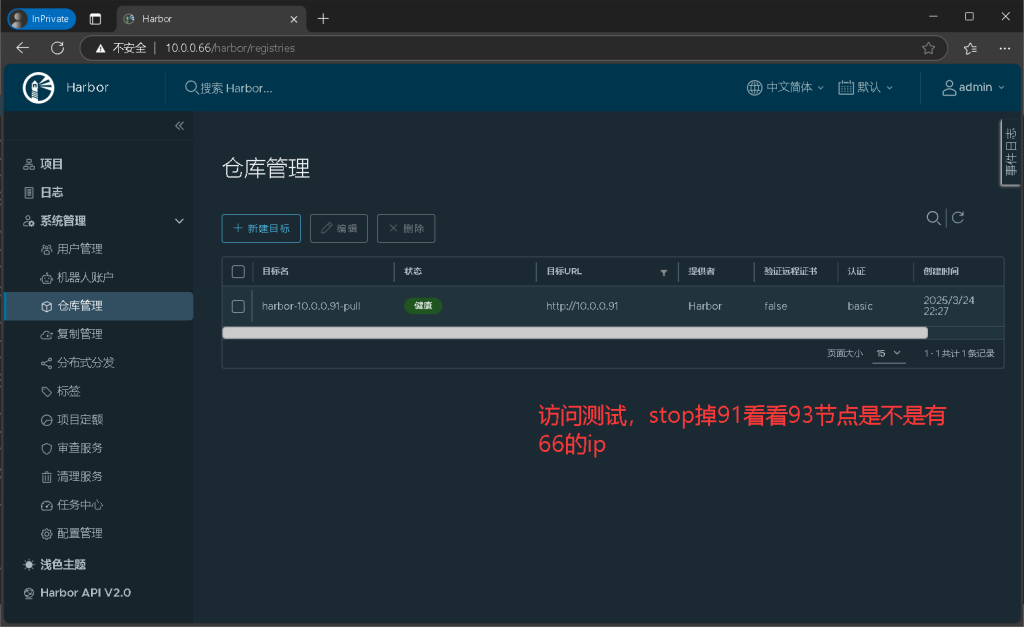
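As an alternative to a replication rule, or to spot-check its result, a single image can be copied by hand with pull, tag and push. This is a sketch that rests on several assumptions. Both registries must be listed in insecure-registries. You must already be logged in to Harbor. A Harbor project named after the image's first path component (for example com-web) must already exist. retarget_ref and migrate_image are hypothetical names. The repositories in the old registry can be listed through the Registry HTTP API v2 catalog endpoint, for example curl http://10.0.0.92:5000/v2/_catalog.

```shell
#!/usr/bin/env bash
# Sketch: copy one image from the old registry to Harbor.
# Assumption: the source reference always starts with a registry host.

# Point an image reference at another registry:
# 10.0.0.92:5000/com-web/nginx:1.27.4-alpine -> 10.0.0.93/com-web/nginx:1.27.4-alpine
retarget_ref() {
  local src="$1" dst_registry="$2"
  printf '%s/%s\n' "$dst_registry" "${src#*/}"
}

# Pull from the source, retag for the destination, push.
migrate_image() {
  local src="$1" dst
  dst="$(retarget_ref "$src" "$2")"
  docker pull "$src" && docker tag "$src" "$dst" && docker push "$dst"
}
```

Usage: migrate_image 10.0.0.92:5000/com-web/nginx:1.27.4-alpine 10.0.0.93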

Hands-on: Harbor high availability

1. Deploy Harbor on node 91 as well

(Same steps as on 93; omitted.)

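2. Two approaches

- Shared storage: both nodes mount the same NFS share.

- Replication: Harbor's scheduled push/pull replication keeps the two instances in sync, and keepalived provides high availability.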

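3. Configure mutual replication

- Also configure 91 to pull images from 93, so that each node pulls from the other. Note: if the project is private, enter the account and password when creating the registry endpoint.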

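4. Configure keepalived

# 91 is the master node; the VIP is 10.0.0.66

[root@elk91 keepalived]# cat /etc/keepalived/keepalived.conf

global_defs { # global settings

    router_id harbor-node1 # name identifying this node

}

vrrp_instance VI_1 {

    state MASTER # initial role of this node

    interface eth0 # NIC the VIP is bound to

    virtual_router_id 50 # virtual router ID; must be 50 on both nodes

    priority 150 # priority; the node with the higher value holds the VIP

    advert_int 1 # VRRP advertisement interval, in seconds

    authentication { # authentication, shared by both members of router group 50

        auth_type PASS # authentication type

        auth_pass 1111 # authentication password

    }

    virtual_ipaddress {

        10.0.0.66 # virtual IP (VIP)

    }

}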

[root@elk91 keepalived]#

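# 93 is the backup node

[root@elk93 harbor]# cat /etc/keepalived/keepalived.conf

global_defs { # global settings

    router_id harbor-node2 # name identifying this node

}

vrrp_instance VI_1 {

    state BACKUP # initial role of this node

    interface eth0 # NIC the VIP is bound to

    virtual_router_id 50 # virtual router ID; must match the master (50)

    priority 100 # priority; lower than the master's 150

    advert_int 1 # VRRP advertisement interval, in seconds

    authentication { # authentication; must match the master

        auth_type PASS # authentication type

        auth_pass 1111 # authentication password

    }

    virtual_ipaddress {

        10.0.0.66 # virtual IP (VIP)

    }

}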

[root@elk93 harbor]#
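As configured above, keepalived moves the VIP 10.0.0.66 only when the whole node or the keepalived process on 91 stops. If just the Harbor containers on 91 fail, the VIP stays there. A common refinement is to have keepalived run a health check through vrrp_script. This is a sketch under several assumptions: curl is installed; Harbor answers GET /api/v2.0/ping over plain HTTP on each node; the script path, the chk_harbor name and the weight value are chosen for this example and are not part of the lab.

```shell
#!/usr/bin/env bash
# Sketch of a Harbor health check for keepalived's vrrp_script.
# Assumptions: curl is installed and Harbor answers GET /api/v2.0/ping.
#
# Possible keepalived.conf additions on both nodes (not part of the lab):
#   vrrp_script chk_harbor {
#       script "/etc/keepalived/check_harbor.sh"
#       interval 2
#       weight -60
#   }
# and inside vrrp_instance VI_1:
#   track_script {
#       chk_harbor
#   }
# The hypothetical /etc/keepalived/check_harbor.sh would contain this
# function followed by the single line: check_harbor
#
# With weight -60, a failing MASTER drops from 150 to 90, below the
# BACKUP's 100, so the BACKUP takes over the VIP.

# Return 0 if Harbor responds within 3 seconds, non-zero otherwise.
check_harbor() {
  local base_url="${1:-http://127.0.0.1}"
  curl -fsS -o /dev/null --max-time 3 "${base_url}/api/v2.0/ping"
}
```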

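Summary

- Dockerfile optimization ideas

  - Build speed:

    - use .dockerignore to keep unneeded files out of the build context

    - use a nearby package mirror (e.g. mirrors.aliyun.com)

    - keep files that are awkward to download locally and add them with COPY

    - merge multiple Dockerfile instructions

    - put rarely changing instructions near the top to make full use of the build cache

  - Image size:

    - use a small base image, such as alpine

    - delete caches and installation packages that are no longer needed

    - use multi-stage builds

- docker-compose

  - a single-host orchestration tool for managing a group of containers.

  Common applications:

  - Zabbix

  - WordPress

  - Elasticsearch

  - Kibana

- Basic use of docker registry

- Deploying and using a Harbor registry

- Migrating docker registry to Harbor

- High-availability options for Harbor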
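For the .dockerignore point in the summary, here is a sketch for the build directory of the multi-stage game-image example earlier in these notes (Dockerfile, start.sh, com-killbird.tar.gz). The ignore patterns are an assumption about what that directory might contain, and write_dockerignore is a hypothetical helper. Note the ! exception. Without it, the *.tar.gz rule would also exclude com-killbird.tar.gz, and the ADD instruction would fail because the file would be missing from the build context.

```shell
#!/usr/bin/env bash
# Sketch: write a .dockerignore into a build directory.
# Patterns are matched against paths relative to the context root;
# later lines override earlier ones, so the '!' exception comes last.
write_dockerignore() {
  local dir="$1"
  cat > "$dir/.dockerignore" <<'EOF'
# version-control metadata and notes are not needed in the image
.git
*.md
# exclude stray archives, but keep the one the Dockerfile ADDs
*.tar.gz
!com-killbird.tar.gz
EOF
}
```

Usage, run from the multi-stage build directory: write_dockerignore .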

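Suggested projects:

- Finish all the exercises and organize them into a mind map;

- Deploy a highly available Harbor

- Deploy dify

  https://github.com/langgenius/dify/tree/main/docker

- Integrate dify with deepseek-r1:7b.

- Build a local knowledge base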